Why do we need orchestration in a data architecture?

Orchestration for data pipelines

Published on: March 25, 2025 | Last edited on: March 25, 2025 | 8-minute read

Lirav DUVSHANI

An orchestration tool is arguably the most important part of a data architecture, as it ensures that everything runs in the correct order.

An orchestration tool provides an easy way to orchestrate all executions and to implement Directed Acyclic Graphs, also known as DAGs.

First, let's define DAGs.

What are DAGs (Directed Acyclic Graphs)?

Let's consider a real-life example, planning a wedding, with two goals in mind: enjoying your wedding day and enjoying your honeymoon.

All the tasks have to happen in a certain order, and there's no looping back:

- You can send invitations only after deciding who to invite

- You must set up the venue before the wedding day party

- Certain tasks can be done in parallel, such as buying the decorations, finding and booking a caterer and a photographer, and sending invitations

A DAG consists of:

- Nodes

  - In our example, the nodes are tasks such as choosing a date for the party or planning the honeymoon

- Edges

  - In our example, the edges are the directed dependencies between pairs of nodes, such as the fact that deciding who to invite must happen after choosing the venue for the party

- No directed cycles in the graph

  - In our example, no chain of dependencies can loop back to an earlier task. For instance, you cannot decide once again who to invite after having sent out the invitations
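To make these three properties concrete, here is a minimal sketch of the wedding DAG using Python's standard `graphlib.TopologicalSorter` (Python 3.9+). The task names and the exact dependencies are illustrative assumptions, not a complete wedding plan. Each task maps to the set of tasks that must finish before it; a topological sort returns an order that respects every edge, and adding an edge that loops back makes the sort fail with a `CycleError`.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical wedding-planning tasks: each task maps to the set of tasks
# that must be finished before it can start (its predecessors).
wedding_dag = {
    "choose_date": set(),
    "choose_venue": {"choose_date"},
    "decide_guest_list": {"choose_venue"},
    "send_invitations": {"decide_guest_list"},
    "buy_decorations": {"choose_date"},
    "book_caterer": {"choose_venue"},
    "book_photographer": {"choose_date"},
    "set_up_venue": {"choose_venue", "buy_decorations"},
    "wedding_day_party": {"set_up_venue", "send_invitations", "book_caterer", "book_photographer"},
    "plan_honeymoon": {"choose_date"},
    "honeymoon": {"wedding_day_party", "plan_honeymoon"},
}


def execution_order(dag: dict[str, set[str]]) -> list[str]:
    """Return one order that respects every edge; raises CycleError on a cycle."""
    return list(TopologicalSorter(dag).static_order())


order = execution_order(wedding_dag)
print(order)

# Deciding who to invite again after sending the invitations loops back
# to an earlier task: the graph is no longer acyclic.
cyclic_plan = dict(wedding_dag)
cyclic_plan["decide_guest_list"] = {"choose_venue", "send_invitations"}
detected_cycle = None
try:
    execution_order(cyclic_plan)
except CycleError as err:
    detected_cycle = err.args[1]  # list of the tasks forming the cycle
print("cycle:", detected_cycle)
```

Several valid orders usually exist (for example, the decorations can be bought before or after booking the caterer); the sort only guarantees that every task comes after all of its predecessors.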

These principles are fundamental in orchestrating data processes.

For a more theoretical explanation of DAGs, have a look at the Wikipedia page on directed acyclic graphs.

DAGs for data processes

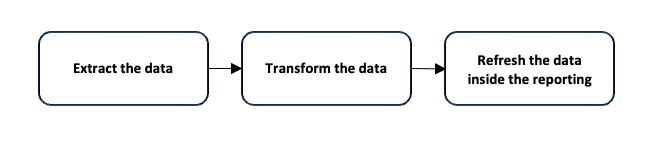

A simple data process - Only sequential

Let's first consider a simple example: the sequential execution of three jobs, namely a data extraction job, a transformation job and the refresh of a visualisation based on the transformed data.

To ensure the reporting contains the latest data, we need to first extract the data, then transform it, and only then refresh the reporting.

Working backwards from the end result: for the data available in the reporting to be the most up to date, the reporting refresh task should run every time its predecessor applies an update, which happens whenever the transformation task completes.
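The sequential pipeline can be sketched in a few lines of Python. The three job functions are hypothetical stand-ins that return toy values, and `run_sequential` is an illustrative helper, not part of any orchestration tool. The point is the ordering rule: each step receives the previous step's output, and a failure stops the chain so the reporting is never refreshed from a failed transformation.

```python
from typing import Callable


# Hypothetical jobs returning toy values in place of real extraction,
# transformation and reporting-refresh logic.
def extract() -> list[dict]:
    return [{"customer": "a", "amount": 10}, {"customer": "b", "amount": 5}]


def transform(rows: list[dict]) -> int:
    return sum(row["amount"] for row in rows)


def refresh_report(total: int) -> str:
    return f"report refreshed with total={total}"


def run_sequential(steps: list[Callable]) -> object:
    """Run steps in order, feeding each one the previous output; stop at the first failure."""
    result = None
    for position, step in enumerate(steps):
        try:
            result = step() if position == 0 else step(result)
        except Exception as exc:
            # Later steps never run, so the report is not refreshed from a failed upstream job.
            raise RuntimeError(f"{step.__name__} failed; downstream steps skipped") from exc
    return result


print(run_sequential([extract, transform, refresh_report]))
```

Here `run_sequential([extract, transform, refresh_report])` returns `"report refreshed with total=15"`; if `transform` raised an exception, `refresh_report` would simply never be called.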

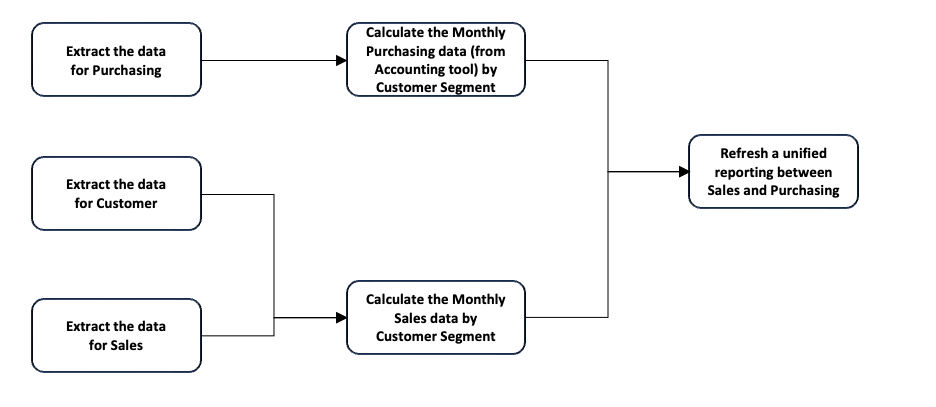

A more complex data process - Sequential and Parallel

Let's consider a more complex example, involving both sequential and parallel execution of jobs.

In this example, a lot more is happening...

Three data sources need to be extracted, and each one is used in different calculations.

The customer and sales data are needed to calculate the monthly sales data by customer segment, meaning the extraction of these data must precede this calculation.

This DAG shows the dependencies between tasks but does not define the exact execution logic.
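One naive execution logic that can be derived from the DAG alone is to run it in waves: start every task whose predecessors are all done, wait for the whole wave, then repeat. The sketch below does this with Python's `graphlib.TopologicalSorter`. The customer and sales edges into the monthly sales calculation come from the text; the third source (`extract_source_3`), the second calculation (`calc_other_metric`) and its inputs are hypothetical placeholders for the rest of the diagram.

```python
from graphlib import TopologicalSorter

# Predecessors of each task. The two extracts feeding the monthly sales
# calculation come from the article; extract_source_3 and calc_other_metric
# (and its inputs) are hypothetical placeholders for the rest of the diagram.
pipeline = {
    "extract_customers": set(),
    "extract_sales": set(),
    "extract_source_3": set(),
    "calc_monthly_sales_by_segment": {"extract_customers", "extract_sales"},
    "calc_other_metric": {"extract_sales", "extract_source_3"},
    "refresh_unified_reporting": {"calc_monthly_sales_by_segment", "calc_other_metric"},
}


def parallel_waves(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; tasks in the same wave have no dependency on each other."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises CycleError if the graph is not a DAG
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every predecessor of these tasks is done
        waves.append(ready)
        sorter.done(*ready)  # naive policy: assume the whole wave succeeded
    return waves


for wave in parallel_waves(pipeline):
    print(wave)
```

For this graph, the waves are the three extractions, then the two calculations, then the reporting refresh. Waiting for a whole wave is only one possible policy among several.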

Take the calculation of the monthly sales data, which has two predecessors. There are many possibilities:

- Should we update the monthly sales data every time the customer or sales data is extracted?

- Should we update the monthly sales data once a day, after both the customer and sales data have been extracted?

- Should we update the monthly sales data every month on the 3rd, regardless of the customer and sales data extraction?

This is where we need to provide not only the DAG but also the triggering rules.
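The three options for the monthly sales calculation can be written as small predicate functions that an orchestrator would evaluate before starting the task. This is a hypothetical sketch: the function names and the use of task-name strings as events are assumptions made for illustration, and a real tool would also keep track of whether today's run already happened.

```python
from datetime import date

# Predecessors of the monthly sales calculation, as described in the article
# (the task names themselves are hypothetical).
PREDECESSORS = {"extract_customers", "extract_sales"}


def on_any_predecessor(finished_task: str) -> bool:
    """Option 1: run every time the customer OR the sales extraction finishes."""
    return finished_task in PREDECESSORS


def on_all_predecessors(finished_today: set[str]) -> bool:
    """Option 2: for today's run, wait until BOTH extractions have finished."""
    return PREDECESSORS <= finished_today


def on_calendar(today: date) -> bool:
    """Option 3: run on the 3rd of every month, regardless of the extractions."""
    return today.day == 3


# Only the sales extraction has finished so far today.
print(on_any_predecessor("extract_sales"))      # True
print(on_all_predecessors({"extract_sales"}))   # False
print(on_calendar(date(2025, 3, 3)))            # True
```

With only the sales data extracted, option 1 fires immediately while option 2 keeps waiting for the customer extraction, and option 3 ignores both.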

Triggers

To automate task execution, we use triggers, which are conditions that determine when a task starts.

There are two main types of triggers:

- Time-based triggers: the time at which the trigger is activated is defined in advance. For example, every Monday at 3 a.m. or every day at 10 p.m.

- Event-based triggers: a condition on an event activates the trigger. For example, waiting for another task to succeed or for a file to become available at a specific location.

Many tools also implement more specific triggers, such as webhook triggers, real-time triggers, file-existence triggers, ...
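Both trigger types boil down to a check that the orchestrator evaluates. The sketch below shows a time-based trigger computing the next "every Monday at 3 a.m." run, and a file-existence event trigger. The function names are hypothetical; many tools express schedules with cron-style expressions instead of hand-written date arithmetic.

```python
from datetime import datetime, timedelta
from pathlib import Path


def next_weekly_run(now: datetime, weekday: int = 0, hour: int = 3) -> datetime:
    """Time-based trigger: next `weekday` (Monday = 0) at `hour`:00, strictly after `now`."""
    candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    if candidate <= now:  # this week's slot has already passed
        candidate += timedelta(days=7)
    return candidate


def file_arrived(path: str) -> bool:
    """Event-based trigger: fire once a file is available at the expected location."""
    return Path(path).exists()


print(next_weekly_run(datetime(2025, 3, 26, 10, 0)))  # 2025-03-31 03:00:00
```

Starting from Wednesday, March 26, 2025 at 10:00, the next Monday 3 a.m. slot is March 31; an orchestrator would poll `file_arrived` (or listen for a storage notification) until the expected file shows up.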

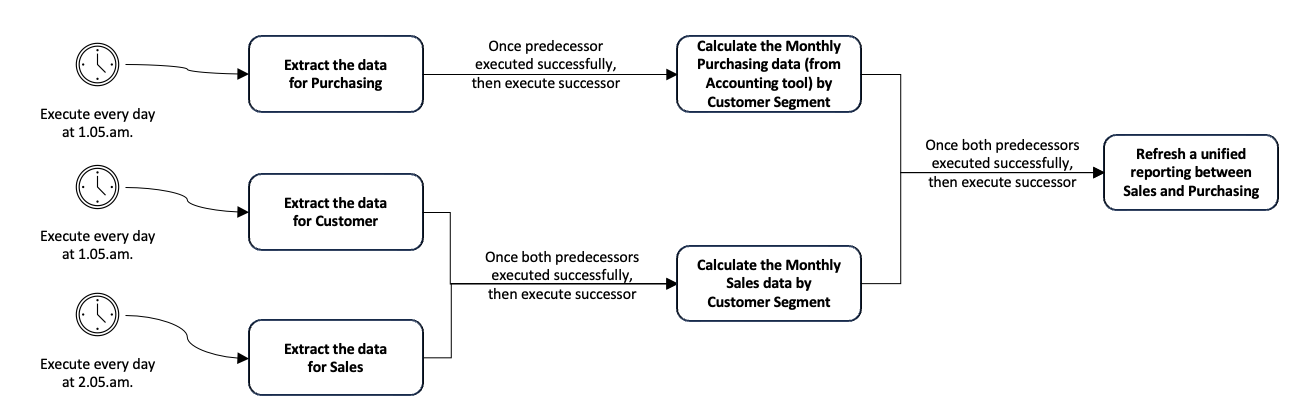

Example of DAG with trigger

Using our previous example with sequential and parallel execution, we add triggers to enforce a specific logic.

Here, the goal is to refresh the unified reporting daily, once all the data has been updated.

To meet this goal, we introduce 6 triggers:

- 3 triggers on the extract tasks

  - Each extract task is assigned a time-based trigger so that it runs daily at a specific time

- 2 triggers on the calculation tasks

  - Each calculation task is assigned an event-based trigger so that it runs only once all its predecessors have completed successfully

- 1 trigger on the refresh of the unified reporting

  - The refresh task is assigned an event-based trigger so that it runs only once all its predecessors have completed successfully
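The six triggers can be simulated in plain Python to check that the refresh only happens once everything upstream has succeeded. The article only says that each extract runs daily at a specific time, so the times (1:00, 1:30, 2:00), the fixed 20-minute duration, and the names `extract_source_3` and `calc_other_metric` are all hypothetical.

```python
from collections.abc import Collection
from datetime import date, datetime, time, timedelta

# Hypothetical schedule: the article only says each extract runs daily at
# "a specific time", so these times and the fixed duration are made up.
TIME_TRIGGERS = {
    "extract_customers": time(1, 0),
    "extract_sales": time(1, 30),
    "extract_source_3": time(2, 0),  # hypothetical third source
}
# Event-based triggers: a task starts once ALL its predecessors have succeeded.
EVENT_TRIGGERS = {
    "calc_monthly_sales_by_segment": {"extract_customers", "extract_sales"},
    "calc_other_metric": {"extract_sales", "extract_source_3"},  # hypothetical
    "refresh_unified_reporting": {"calc_monthly_sales_by_segment", "calc_other_metric"},
}
DURATION = timedelta(minutes=20)  # assumed runtime of every task


def simulate_day(day: date, failed: Collection[str] = ()) -> dict[str, datetime]:
    """Return the start time of each task that gets triggered on `day`.

    Tasks listed in `failed` start but never succeed, so their successors stay idle.
    """
    started: dict[str, datetime] = {}
    succeeded: dict[str, datetime] = {}  # task -> end time
    for task, at in TIME_TRIGGERS.items():  # the 3 time-based triggers
        started[task] = datetime.combine(day, at)
        if task not in failed:
            succeeded[task] = started[task] + DURATION
    progress = True
    while progress:  # fire the 3 event-based triggers as predecessors succeed
        progress = False
        for task, preds in EVENT_TRIGGERS.items():
            if task not in started and preds.issubset(succeeded):
                started[task] = max(succeeded[p] for p in preds)
                if task not in failed:
                    succeeded[task] = started[task] + DURATION
                progress = True
    return started


for task, start in simulate_day(date(2025, 3, 25)).items():
    print(f"{start:%H:%M} {task}")
```

Under these assumptions, the reporting refresh starts at 02:40, right after the slower calculation finishes. Passing `failed={"extract_sales"}` leaves both calculations and the refresh unstarted, so the unified reporting is never refreshed from incomplete data.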

Now that we understand DAGs and triggers, let's dive into what orchestration tools have to offer.

Orchestration tool for data processes

Orchestration tools automate, manage and monitor workflows. In most orchestration tools, workflows are structured as DAGs.

In some cases, though rarely, workflows can contain cycles; such workflows are then general directed graphs rather than DAGs.

The key features of orchestration tools are:

- Workflow definition: creating and structuring workflows, including the dependencies between their tasks

- Scheduling: automating execution at specific times or based on trigger conditions

- Monitoring & Alerting: monitoring, logging and alerting on warnings and failures

  - Easy monitoring: a global view of all executions, usually through a UI available to users

  - Automated failure detection: emails sent to the relevant teams when issues occur

  - Centralized logging: all logs are available in a single location

- Scalability: handling a high volume of workflows and triggers executing in parallel
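To illustrate what orchestration tools take care of, here is a minimal hand-rolled sketch of retries, centralized logging and failure alerts using Python's standard `logging` module. The `notify_team` hook and `flaky_extract` task are hypothetical (the hook only logs an error instead of sending an email), and the retry count is an arbitrary default.

```python
import logging
import time
from typing import Callable

# A single shared logger stands in for centralized logging.
logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s: %(message)s")
log = logging.getLogger("orchestrator")


def notify_team(task_name: str, error: Exception) -> None:
    """Hypothetical alert hook; a real setup would email the relevant team."""
    log.error("ALERT: %s failed for good: %s", task_name, error)


def run_with_retries(task: Callable[[], object], retries: int = 2, delay_s: float = 0.0) -> object:
    """Run `task`, retrying up to `retries` times; alert and re-raise if every attempt fails."""
    attempts = retries + 1
    for attempt in range(1, attempts + 1):
        try:
            result = task()
            log.info("%s succeeded on attempt %d", task.__name__, attempt)
            return result
        except Exception as exc:
            log.warning("%s failed on attempt %d: %s", task.__name__, attempt, exc)
            if attempt == attempts:
                notify_team(task.__name__, exc)
                raise
            time.sleep(delay_s)  # back off before the next attempt


# A hypothetical flaky extraction that fails once, then succeeds.
calls = {"n": 0}


def flaky_extract() -> str:
    calls["n"] += 1
    if calls["n"] < 2:
        raise ConnectionError("source temporarily unavailable")
    return "rows"


result = run_with_retries(flaky_extract)
```

The first attempt logs a warning and the second succeeds, so no alert is sent. Orchestration tools typically let you configure this behaviour per task instead of re-implementing it in every job.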

Different types of orchestration tools

Orchestration tools differ based on several factors:

- License type: Open-Source, Commercial, Hybrid (Open-Core)

- Deployment model: On-Premises, Cloud-Native, Hybrid

- Workflow definition approach: Code-Based, Configuration-Based, UI-Driven

- Execution mode: Batch Processing, Real-Time Streaming

- Scalability & distribution: Single-Node Execution, Distributed, Kubernetes-Native

- Primary use case: ETL, ELT, Data Warehousing, Reverse ELT, Machine Learning Pipelines, DevOps, Business Process Automation

Conclusion - When to make the switch to an orchestration tool?

When launching a data initiative, the orchestration tool is not the first thing to implement in the data platform. Whether you are prototyping a new data product or building a simple project with only a handful of jobs, orchestration may not be a priority. Native time-based schedulers such as cron, configured through a crontab, can be sufficient for this need.

But as soon as the volume of tasks increases and the underlying dependencies between them grow more complex, the need for an orchestration tool becomes apparent. Time-based triggers like crontab quickly become brittle in the face of interdependent jobs, retries, failures, or dynamic task generation. Managing dependencies manually not only introduces the risk of silent failures but also makes your system harder to maintain and scale.

Orchestration tools bring structure, reliability, and visibility to your data workflows, enabling teams to move faster with confidence as systems grow in complexity.

Still, when it comes to choosing an orchestration tool, keep in mind that each tool offers different capabilities tailored to different use cases. Choosing the right tool depends on factors like workflow complexity, scalability needs, and integration capabilities. Whether you're automating ETL pipelines, managing real-time data streams, or orchestrating machine learning workflows, selecting the right orchestration tool will be key to building efficient and reliable data infrastructure.