How to build a Scalable Open Source Data Platform - Sopht - Project Plan

Sopht Data Platform Implementation - Project Plan

Published on: April 2, 2025 | Last edited on: April 2, 2025 | 12-minute read

Lirav DUVSHANI

Series : How to build a Scalable Open Source Data Platform - Sopht - Project Plan

Sopht Data Platform Implementation - Project Plan

Sopht Data Platform Implementation - New Architecture

Kestra's success story at Sopht

How to ensure quality in the development of data pipelines

How to empower data stewards with a dedicated data quality reporting

Project Plan

Sopht - A Green ITOps Solution

Sopht is a French start-up building a unique, data-driven Green ITOps solution that drives environmental and financial performance through automated decarbonization recommendations and guided actionability. Here's how the solution works:

- Automated data collection: Automated and streamlined data collection across the entire IT value chain, covering SaaS, hardware, usage, Cloud & On-Prem, networks, web, and more.

- Granular & dynamic analysis: Real-time analysis of Scope 2 and 3 emissions with a methodology based on LCA, GHG Protocol, ADEME, ISO...

- Suggestions & simulations: Recommendations from the solution and simulation of the impact of the decarbonization levers.

- Distributed steering: Capacity to build, assign and track action plans and closely monitor the real-time impact of these actions on the decarbonization trajectory.

As you can imagine, data is at the core of the software provided by Sopht.

Context

At the start of 2024, after a successful seed round, Sopht needed to make sure its solution could scale, both with a growing number of customers and with a growing set of features provided to them.

In this context, we audited the current state of the software architecture, mainly its data architecture, and proposed a target architecture including key improvements. Our evaluation identified potential bottlenecks, inefficiencies, and areas for optimization to enhance scalability and performance.

How was this platform built?

The initial proposal for the target architecture was a complete redesign of the calculation "core" module, which required the migration of all the data pipelines. Before starting the redesign project, we needed a plan.

This plan had to take several constraints into account:

- The company could not simply stop adding new features and correcting anomalies

- All the customers were actively using the application, so any impact on the reporting would be visible to them

- The number of data pipelines to migrate was considerable

- This was an opportunity to improve the methodology of the various emission calculations already implemented, so taking the time for testing would be very beneficial

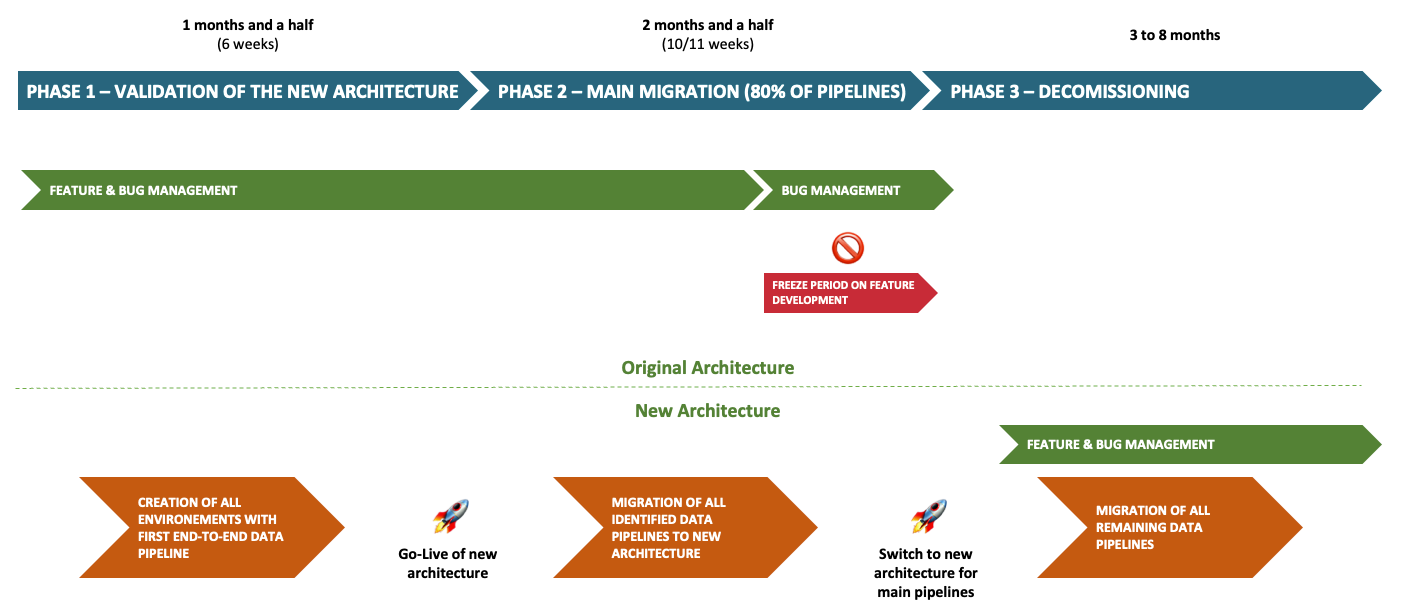

Considering all of this, we put in place a global migration plan with three main phases:

- The validation of the new architecture with the deployment of all the environments and validation of the first end-to-end data pipeline

- The migration of the most important data pipelines (about 80% of the data pipelines)

- The migration of remaining data pipelines and the decommissioning of the original data platform

| Phase Order | Phase 1 | Phase 2 | Phase 3 |
|---|---|---|---|
| Phase Name | New architecture validation | Main migration to new architecture | Final migration to new architecture and decommissioning |
| Duration | 1.5 months | 2.5 months | 3 to 8 months |
| Customer-facing platform during phase | Original Platform | Original Platform | New Platform |
| Original Platform | ✅ Active | ✅ Active | ✅ Active, to be decommissioned |
| New Platform | 🚧 Under Construction | ✅ Yes | ✅ Yes |
| Bug Management | Original Platform | Original Platform | Original and New Platform |
| Feature Change/Addition | Original Platform | Original Platform with a 🚫 freeze period at the end | New Platform |

- First, the creation of the new architecture with all the expected environments (development, staging and production)
    - The goal of this first phase was to validate the implementation of the new architecture
    - The planned duration of this phase was one and a half months
    - During this phase, the current architecture was to be maintained as is, in "business-as-usual" mode

- Second, once the new architecture was validated, with all the expected environments deployed and the first data pipelines running, the migration of the most critical data pipelines (about 70 to 80% of the pipelines) could start
    - The goal of this second phase was to migrate the most critical data pipelines
    - The planned duration of this phase was two and a half months
    - During this phase, any feature that had to be delivered to customers before the end of the phase had to be implemented in both the old and the new architecture
    - At the end of this phase, a freeze period still had to be planned to ensure that no feature was added at the last minute

- Third, all the remaining data pipelines could be moved to the new architecture little by little, in parallel with product features and anomaly management
    - The goal of this phase was to end up with a single architecture and to decommission every tool and database no longer in use, such as MongoDB and the initial PostgreSQL database
    - The duration of this phase could be 3 to 8 months, depending on the resources and priority given

This plan requires a dual run: once the first phase is complete, both the old and the new architecture keep being loaded. Although a dual run takes more resources to maintain, it avoided a big-bang migration, in which the move to the new scalable architecture would have required 7 to 8 months.
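A dual run is mostly useful if the outputs of both platforms are compared for the same inputs. The sketch below is a minimal illustration under assumptions of ours, not Sopht's actual tooling: each platform's result is reduced to a mapping from a hypothetical key (here an emission category) to a number, and `expected_changes` lists the keys where a methodology change is intended, so that they are reviewed separately instead of being flagged as migration issues.

```python
from dataclasses import dataclass
from math import isclose
from typing import Optional


@dataclass(frozen=True)
class Mismatch:
    key: str
    old: Optional[float]  # None when the key is missing on the old platform
    new: Optional[float]  # None when the key is missing on the new platform


def compare_dual_run(old, new, expected_changes=frozenset(), rel_tol=1e-6):
    """Return unexpected differences between old and new platform results."""
    mismatches = []
    for key in sorted(old.keys() | new.keys()):
        if key in expected_changes:
            continue  # intended methodology change, reviewed separately
        old_value, new_value = old.get(key), new.get(key)
        missing = old_value is None or new_value is None
        if missing or not isclose(old_value, new_value, rel_tol=rel_tol):
            mismatches.append(Mismatch(key, old_value, new_value))
    return mismatches


# Hypothetical figures, for illustration only.
old_results = {"laptops": 120.0, "cloud": 300.0, "network": 45.0}
new_results = {"laptops": 120.0, "cloud": 310.0, "network": 52.0}

mismatches = compare_dual_run(old_results, new_results, expected_changes={"network"})
for m in mismatches:
    print(f"{m.key}: old={m.old} new={m.new}")
```

Here only `cloud` is reported: `laptops` matches, and `network` is skipped because its change is declared as intended. Keeping the list of intended changes explicit is a design choice that separates methodology decisions from migration defects.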

What actually happened?

As in so many data projects, we encountered a few bumps in the road, but we made it.

The main ones were:

- Unexpected technical complexity in reaching proper performance when using DuckDB and PostgreSQL on very high data volumes

- A testing phase that was far more complex than anticipated, with significant friction between intended changes in the methodology and issues in the migration

As a result, the 🚀 go-live of the new data platform, with most of the platform implemented (end of phase 2), took place 5 months after the start of the project, a single month later than originally planned.

How did we measure the success of this project?

Finance KPIs

- Direct Reduction of Cost: 60% lower cost for raw data storage (Bronze layer), achieved by replacing MongoDB with Parquet files on S3 for all high-volume data sources

- Optimization of Cost: Further savings are possible once the migration from MongoDB to S3 is complete. Decommissioning MongoDB is expected to bring an additional 80% reduction on the remaining cost
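To read the two finance figures together, the arithmetic below compounds them. It is only a sketch, and it assumes that both percentages apply to the same raw-storage cost baseline, which the KPIs above do not state explicitly; `remaining_share` is a helper name of our own.

```python
def remaining_share(*reductions):
    """Share of the initial cost left after applying successive reductions."""
    share = 1.0
    for reduction in reductions:
        share *= 1.0 - reduction
    return share


achieved = 0.60             # reduction already obtained on raw data storage
expected_additional = 0.80  # expected reduction on what remains (assumption: same baseline)

remaining = remaining_share(achieved, expected_additional)
total_reduction = 1.0 - remaining
print(f"{total_reduction:.0%}")  # prints "92%"
```

Under that assumption, about 8% of the initial raw-storage cost would remain after MongoDB is fully decommissioned.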

Production KPIs (User Related KPIs)

- UI response time: Less than 5 seconds for the initial load and less than 1 second upon selection (down from more than 10 seconds on some specific queries)

- Number of Daily Kestra jobs: 1,240 in the first weeks after the go-live

- Average Kestra job Success Rate: 98.12%

- Data Volume Stored: More than 2 TB

- Anomaly Root Cause Analysis (average estimated time): 30 minutes (down from hours or even days before)

Code KPIs

- Number of lines of Python code: 49,000

- Number of lines of SQL code: 4,850

- Number of lines of Kestra YAML: 10,000

- Number of unit tests: 205, covering about 91% of the Python code

Project Feedback

C-level takeaway

The CTO's feedback (Gautier Levert): "The move to the new data architecture provided serenity and efficiency for the team for today and for the future. On top of that, we have the ability to detect and correct a bug in under half a day, instead of 2 days to 2 weeks in the original architecture, which was one of the most painful points."

The CPO's feedback (Julien Rouzé): "The change brought by DataFlooder was done with serenity in the approach and in the scoping of the mission. Our data needs and expectations linked to our business requirements were understood, and the data stack as designed and built meets them and enables us to be ready to scale."

Developers team takeaway

Every new feature or change is now much easier to define, and every topic is easier to analyze. Everything can be handled with more serenity.

The time spent on debugging and explaining results has dropped drastically.

Each new data engineer on the team now finds the necessary information in less than a day and becomes an effective member in less than a week.

Most of the time is now spent on value-adding tasks.

Product team takeaway

All analyses are simply easier in the new architecture. Finding where the data lives now comes naturally.

DataFlooder takeaway

A technical migration is rarely only technical, all the more so when changes in feature behavior are planned from the start. As is usually the case, the involvement of the Product team proved critical to the success of the transition to this new platform. In the end, this project was a success mainly thanks to the strong involvement of both the Tech and Product teams.

What are the next steps to improve the data platform and culture?

Now that the data platform is up and running and meets the first business needs, there is still room to improve development and testing efficiency and to further empower data users.

Several additions to the initial data platform design are being considered:

- Phase 3 of the migration
    - Decommissioning of MongoDB: Finalize the transition to S3 to unify the platform
    - Migration of the last data flows: Migrate to the new data platform to enable the decommissioning of the entire initial calculation module

- Data Quality Gate: Provide detailed insights on the data quality

- Data Freshness Report: Provide information about the current data freshness for all the sources of data

- FinOps report: Monitor the cost of the data platform, including all the different tools

- Automation of all remaining manual deployment tasks: Increase stability and reduce the time spent by developers on recurring tasks

- Increase data explainability: Include additional information to make calculations easier to explain in the customer-facing reporting

- Adoption of a data-centric governance: Implement a governance strategy rooted in data and strengthen governance with a data-first mindset
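As an illustration of the Data Freshness Report idea listed above, here is a minimal sketch. It is not the planned implementation: the source names, the 24-hour threshold and the `freshness_report` function are hypothetical, and in practice the last load timestamps would come from the platform's own metadata.

```python
from datetime import datetime, timedelta, timezone


def freshness_report(last_loaded, max_age, now=None):
    """Return one row per source with its age and whether it is stale."""
    now = now or datetime.now(timezone.utc)
    rows = []
    for source, loaded_at in sorted(last_loaded.items()):
        age = now - loaded_at
        rows.append({"source": source, "age": age, "stale": age > max_age})
    return rows


# Hypothetical sources and timestamps, for illustration only.
now = datetime(2025, 4, 2, 12, 0, tzinfo=timezone.utc)
last_loaded = {
    "cloud_usage": datetime(2025, 4, 2, 6, 0, tzinfo=timezone.utc),
    "hardware_inventory": datetime(2025, 3, 30, 6, 0, tzinfo=timezone.utc),
}

report = freshness_report(last_loaded, max_age=timedelta(hours=24), now=now)
for row in report:
    status = "STALE" if row["stale"] else "fresh"
    print(f'{row["source"]}: {status}, last load {row["age"]} ago')
```

Passing `now` explicitly keeps the function deterministic, which makes it easy to unit test alongside the rest of the pipeline code.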

This article is part of a series showcasing the design and implementation of a scalable open source Data Platform for Sopht, a French Green ITOps start-up.